...a clever blog name here...

one day the css for this site will get sorted

Introduction to USBIP

Sometimes it is useful to access a USB device over a long distance. Various hardware adapters can run USB over Ethernet cables, but in this post I will deal with using USBIP on Linux to make a USB device available remotely over internet protocols.

USBIP has been around for a while but seems to be in a confused state at the moment. The Linux driver has been moved from the independent project into the Linux kernel, where it lived in the drivers/staging/usbip directory until kernel version 3.18, when it moved into the main tree. A further problem is that, while a Windows driver exists, the Linux driver implemented some protocol changes in version 3.16, which means the Windows version is no longer compatible. Something else to fix.

However, with a bit of work I have got my Linux Mint machine working with a USB device hosted on an OpenWRT "Chaos Calmer" router.

OpenWRT installation

The Chaos Calmer version of OpenWRT includes the kernel modules for usbip in the kmod-usbip, kmod-usbip-server and kmod-usbip-client packages. However, the userland tools are not available in any package, so they had to be built manually. This is done by building OpenWRT to get the toolchain and kernel set up on the cross-compilation machine and then building usbip independently.

# required packages
opkg update
opkg install kmod-usb-ohci kmod-usbip kmod-usbip-server kmod-usbip-client
opkg install udev usbutils

To set up the build environment I followed the OpenWRT instructions, in my case selecting the TL-WR1043ND device specifically so that I get a MIPS toolchain and the kernel gets configured for my device. We need to enable udev support so that the libudev library and headers are included in the target tree.

To cross-compile the usbip tools, we need to find the sources and configure them for cross-compiling. In kernel 3.18 (as used in Chaos Calmer) the usbip package has migrated to the main kernel tree, so it is now found in build_dir/toolchain-mips_34kc_gcc-4.8-linaro_uClibc-0.9.33.2/linux/tools/usb/usbip/. The actual path will vary according to the device selection as it is toolchain dependent. From this directory we must run autogen.sh to generate the configure script, then run configure with a number of additional options so that it can find everything. The following script runs configure with the right options for my device.

#!/bin/bash
# Run configure for cross-compiling usbip with the OpenWRT toolchain.
BASE=/opt/src/openwrt-chaos
STAGING_DIR=${BASE}/staging_dir
TOOLCHAIN=${STAGING_DIR}/toolchain-mips_34kc_gcc-4.8-linaro_uClibc-0.9.33.2
TARGET=${STAGING_DIR}/target-mips_34kc_uClibc-0.9.33.2
PATH=${TOOLCHAIN}/bin:$PATH

# Extra arguments given to this script are passed through to configure.
env \
    CFLAGS="-I$TARGET/usr/include" \
    CPPFLAGS="-I$TARGET/usr/include" \
    LDFLAGS="-L$TARGET/lib" \
    ./configure \
    --build=x86_64-unknown-linux-gnu \
    --host=mips-openwrt-linux-uclibc \
    "$@"

In particular this sets the --build and --host options to enable cross-compiling and adds the linux include folder for the target device.

Running make then creates the tools in the src/.libs directory. We need usbip, usbipd and libusbip.so. These need to be copied to the OpenWRT device and installed. The library needs placing in /lib with some soft links created. The utility files can go in /usr/bin. I also found I had to unpack /usr/share/usb.ids.gz into /usr/share/hwdata/usb.ids. It might be nice to arrange for the tools to read the compressed file directly in the future.

root@OpenWrt:/tmp# ls -l /lib/*usbip* /usr/bin/*usbip*
lrwxrwxrwx 1 root root    17 Feb 4 21:39 /lib/libusbip.so -> libusbip.so.0.0.1
lrwxrwxrwx 1 root root    17 Feb 4 21:39 /lib/libusbip.so.0 -> libusbip.so.0.0.1
-rwxr-xr-x 1 root root 64034 Feb 4 21:39 /lib/libusbip.so.0.0.1
-rwxr-xr-x 1 root root 45715 Feb 6 15:53 /usr/bin/usbip
-rwxr-xr-x 1 root root 38300 Feb 6 15:53 /usr/bin/usbipd
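The manual installation can be sketched as a small shell function. This is only an illustration: the function name install_usbip and its arguments are hypothetical, the library file name is taken from the listing above, and it assumes the three build products have already been copied into one directory on the router.

```shell
# Hypothetical helper: install the cross-compiled usbip files.
#   $1 = directory holding usbip, usbipd and libusbip.so.0.0.1
#   $2 = root of the target filesystem (optional, defaults to /)
install_usbip() {
    src=$1
    root=${2:-}
    mkdir -p "$root/lib" "$root/usr/bin" || return 1
    cp "$src/libusbip.so.0.0.1" "$root/lib/" || return 1
    # Create the two soft links shown in the listing.
    ln -sf libusbip.so.0.0.1 "$root/lib/libusbip.so.0" || return 1
    ln -sf libusbip.so.0.0.1 "$root/lib/libusbip.so" || return 1
    cp "$src/usbip" "$src/usbipd" "$root/usr/bin/" || return 1
    chmod 755 "$root/usr/bin/usbip" "$root/usr/bin/usbipd"
}
```

On the router this would be run as something like install_usbip /tmp/usbip-build, where /tmp/usbip-build is a made-up location for the copied files.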

I shall have to look into how to set this up as a package, but for now manual installation will suffice.

Exporting a USB device from OpenWRT

With all the above completed we can use the userland utility to query the available devices and expose them over TCP/IP.

usbip list will list either local or remote devices. We use it to identify the USB bus id of the device to expose.

root@OpenWrt:/tmp# usbip list -l
 - busid 1-1 (1a86:7523)
   QinHeng Electronics : HL-340 USB-Serial adapter (1a86:7523)

usbip bind --busid=1-1 is then used to actually expose the device over TCP/IP. usbipd -D must be run at some point to actually deal with the network communications as well.
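If the bus id changes between boots it may be handy to look it up from the vendor:product id instead. The following is a rough sketch under that assumption; busid_for and export_device are hypothetical helper names, and the parsing relies on the usbip list -l output format shown above.

```shell
# Print the bus id of the first device matching vendor:product id $1,
# reading `usbip list -l` style output on stdin.
busid_for() {
    sed -n "s/^ *- busid \([^ ]*\) ($1).*/\1/p" | head -n 1
}

# Hypothetical helper: bind the device with vendor:product id $1.
# usbipd -D still has to be started separately.
export_device() {
    id=$(usbip list -l | busid_for "$1")
    [ -n "$id" ] || { echo "no device with id $1" >&2; return 1; }
    usbip bind --busid="$id"
}
```

For the serial adapter used here this would be export_device 1a86:7523, run after usbipd -D has been started.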

Linux Mint installation

On the desktop side we need the kernel drivers and the userland tools. The kernel driver modules are included for Mint 17.2 which is using kernel version 3.16. However the usbip utility needs to be built. To do this we need to get the kernel source cloned. For this kernel version the tools are in drivers/staging/usbip. We need to go into the userspace subdirectory and run autogen.sh to generate the configuration script and then run that and make the utilities. I also had to install the libudev-dev package to satisfy the configure script.

sudo apt-get install libudev-dev
cd /opt/src
git clone https://github.com/torvalds/linux
cd linux
git checkout v3.16
cd drivers/staging/usbip/userspace
./autogen.sh
./configure
make
sudo make install
sudo ldconfig

With the above commands completed the usbip utility is installed in /usr/local/bin and can now be used to query and connect to remote usb devices. I have an arduino clone on the openwrt device that shows up as a usb-serial adapter.

$ usbip list -r 192.168.1.47

Exportable USB devices

======================

- 192.168.1.47

1-1: QinHeng Electronics : HL-340 USB-Serial adapter (1a86:7523)

: /sys/devices/platform/ehci-platform/usb1/1-1

: Vendor Specific Class / unknown subclass / unknown protocol (ff/00/00)

: 0 - Vendor Specific Class / unknown subclass / unknown protocol (ff/01/02)

Success! These are the correct USB details for a CH340G chip. The usbip tool can also attach to the remote device and connect it into the local system using usbip attach -r ipaddr -b 1-1. This does not produce any output, but looking in the dmesg logs we can see that we have a new virtual controller and the remote device has been registered as /dev/ttyUSB1.

[72041.213180] vhci_hcd: USB/IP 'Virtual' Host Controller (VHCI) Driver v1.0.0
[72052.409413] vhci_hcd vhci_hcd: rhport(0) sockfd(3) devid(65538) speed(2) speed_str(full-speed)
[72052.648913] usb 5-1: new full-speed USB device number 2 using vhci_hcd
[72052.760982] usb 5-1: SetAddress Request (2) to port 0
[72052.782662] usb 5-1: New USB device found, idVendor=1a86, idProduct=7523
[72052.782670] usb 5-1: New USB device strings: Mfr=0, Product=2, SerialNumber=0
[72052.782673] usb 5-1: Product: USB2.0-Serial
[72052.821611] usbcore: registered new interface driver ch341
[72052.821625] usbserial: USB Serial support registered for ch341-uart
[72052.821636] ch341 5-1:1.0: ch341-uart converter detected
[72052.834595] usb 5-1: ch341-uart converter now attached to ttyUSB1
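Since the attach command prints nothing, a script has to dig the new device name out of the kernel log. A minimal sketch, assuming the "now attached to ttyUSBn" message format seen in the log above; last_attached_tty is a hypothetical helper name.

```shell
# Print the name of the most recently attached USB serial port found in
# kernel log text read from stdin, for example "ttyUSB1".
last_attached_tty() {
    sed -n 's/.*now attached to \(ttyUSB[0-9][0-9]*\).*/\1/p' | tail -n 1
}
```

It would be used as dmesg | last_attached_tty shortly after attaching the device.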

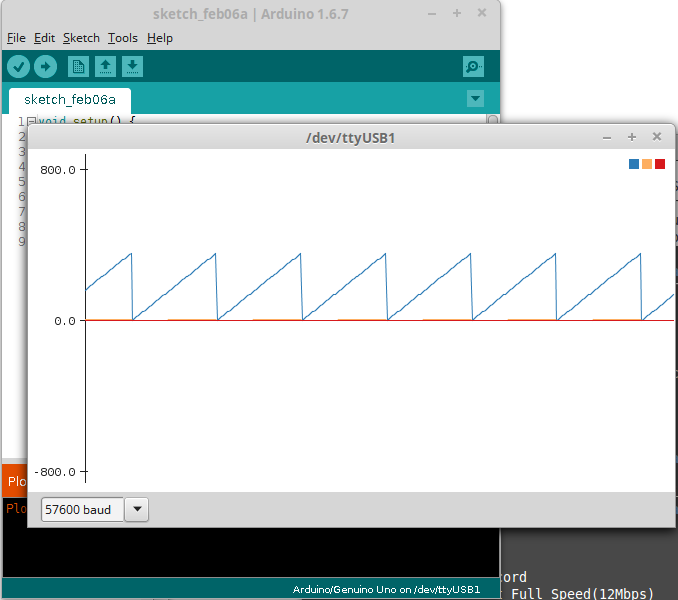

Finally this device can now be used on the desktop system as if it was actually plugged into a local USB port. screen /dev/ttyUSB1 57600 works to view the serial output of this device and it can even be programmed using the Arduino environment. Below is a screenshot of the Arduino serial plotter reading remote data from the OpenWRT USB device.

Once we are finished, sudo usbip detach --port=N disconnects the device, where N is the port number reported by sudo usbip port. The usbip port command shows details about the currently attached devices, including the vendor id, product id, remote IP and port number.

Conclusion

While this shows some promise, I have found that between these two devices only the serial adapter has worked reliably. A USB stick and a webcam both failed to operate over the usbip link. Hopefully some work has been done on this in more recent kernels. In Raspbian for the Raspberry Pi the usbip package is already set up with the current version of these tools, so possibly systems using Linux kernel version 4.x might interoperate more reliably.

The Raspberry Pi Foundation announced a new super low price device yesterday, the Raspberry Pi Zero, and mine arrived today. I didn't manage to find a copy of the magazine where this was included as a free cover computer!

This needs a micro SD card, a micro usb power adapter capable of providing 160mA and a mini-HDMI plug if you want to connect this to a TV. I intend to use this headless and just hook up a serial console but it seemed sensible to initially start it up with a display.

The SD card I obtained as part of the kit from PiHut had NOOBS set up, so Raspbian needed selecting using a USB keyboard and the HDMI display and then it went and installed it for me. To just use a serial console would require installing Raspbian on the card before powering up the Raspberry Pi.

Once the initial boot was complete I connected a USB TTL serial adapter to pins 6, 8 and 10 (GND, TXD, RXD) and started a serial monitor to get logged in. screen /dev/ttyUSB0 115200 and it's up and working. Handily the serial pins are all on the opposite side from the I2C pins I need to use for a DS3231 real time clock chip, so I can attach that to give it an idea of the correct time as well.

Time to hook it up to a robot.

The DS3231 chip is an accurate real time clock with support for battery backed non-volatile RAM to maintain the time while the computer is off. Some very cheap boards are currently available to add one of these to a Raspberry Pi with minimal footprint. However, recent changes to the Linux kernel make this slightly different to enable. Fortunately the most recent distributions now include support for the Device Tree based peripheral support system.

I2C RTC support is already included in the current Raspberry Pi compatible distributions but must be enabled by editing config.txt, which is used in the early boot stages to pass configuration parameters to the kernel. On lubuntu or Raspbian this file is in /boot, but on OpenElec it is found in /flash, which is a separate filesystem that needs remounting with read-write permissions. This file only needs one line to enable the I2C device in Device Tree: dtoverlay=i2c-rtc,ds3231. Once this is done the next boot will detect the device and automatically make use of it.
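Adding the overlay line can be scripted so that running it twice does not duplicate the entry. This is just a sketch; ensure_line is a hypothetical helper name and it needs to be run as root against the real config.txt.

```shell
# Hypothetical helper: append line $2 to file $1 unless an identical
# line is already present.
ensure_line() {
    grep -q -F -x -e "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}
```

For example ensure_line /boot/config.txt 'dtoverlay=i2c-rtc,ds3231', or the same with /flash/config.txt on OpenElec after remounting /flash read-write.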

If you want to manually add the device without forcing a reboot and set the time then install i2c-tools and check that it is detected:

pat@monitor1:~$ sudo i2cdetect -y 1

0 1 2 3 4 5 6 7 8 9 a b c d e f

00: -- -- -- -- -- -- -- -- -- -- -- -- --

10: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --

20: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --

30: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --

40: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --

50: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --

60: -- -- -- -- -- -- -- -- 68 -- -- -- -- -- -- --

70: -- -- -- -- -- -- -- --
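The same check can be done from a script by scanning the i2cdetect table for the address. A small sketch, with i2c_addr_present as a hypothetical helper name; note that once a driver has claimed the address i2cdetect prints UU in that cell instead of the number, so this only matches an unclaimed device.

```shell
# Succeed if the two-digit hex address $1 appears as a cell in
# `i2cdetect -y` output read from stdin.
i2c_addr_present() {
    awk -v addr="$1" '
        /^[0-9a-f]0:/ { for (i = 2; i <= NF; i++) if ($i == addr) found = 1 }
        END { exit !found }'
}
```

Used as sudo i2cdetect -y 1 | i2c_addr_present 68 && echo "DS3231 found".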

To then add this as an RTC device the following command enables it in the running system and causes a new /dev/rtc0 device to be created:

sudo su -c 'echo ds3231 0x68 > /sys/class/i2c-adapter/i2c-1/new_device'

Testing this will show the time is set to some meaningless default:

pat@monitor1:~$ sudo hwclock -r
Sat 05 Feb 2000 07:45:53 GMT -0.696570 seconds

The system time is currently correct as this system is already running NTP and connected to the network. The hardware clock can therefore be updated from the system clock.

pat@spd-status:~$ sudo hwclock --systohc
pat@spd-status:~$ sudo hwclock
Thu 13 Aug 2015 12:42:30 BST -0.573334 seconds

Now it should all work properly with the kernel keeping the hardware clock current and reading it on bootup to fix the time in the absence of networking and NTP.

One of my EE co-workers got hold of an Arduino Zero recently but failed to get it working. I took a look at this and realized that he had walked into the battle of the Arduinos and was trying to use the wrong software. Currently Arduino.cc have not released the Zero, but Arduino.org have released an Arduino Zero Pro and this is what we have here. To support this they have issued a version of the Arduino IDE labelled as 1.7.2 but which is really a 1.5ish version with Zero support bolted in. Quite poorly as well, from a first look.

So having got the device and an IDE capable of programming it, we can try the basic serial output test as shown below. However, it turns out that the included examples will not work as the default Serial class is the wrong one. To get this working Serial5 needs to be used.

#include <Arduino.h>

// for the Arduino Zero
#ifdef __SAMD21G18A__
#define Serial Serial5
#endif

static int n = 0x10;

void setup()
{
    Serial.begin(115200);
    Serial.println("Serial test - v1.0.0");
}

void loop()
{
    Serial.print(n++, HEX);
    if (n % 0x10 == 0)
        Serial.println("");
    else
        Serial.print(" ");
    if (n > 0xff)
        n = 0x10;
    delay(30);
}

Moving on I thought it would be interesting to try and use this with a WizNet based Ethernet shield I have. So with the board set as Arduino Zero let us build one of the Ethernet demos.

C:\opt\arduino.org-1.7.2\libraries\Ethernet\src\Ethernet.cpp: In member function 'int EthernetClass::begin(uint8_t*)':
C:\opt\arduino.org-1.7.2\libraries\Ethernet\src\Ethernet.cpp:19:7: error: 'class SPIClass' has no member named 'beginTransaction'
SPI.beginTransaction(SPI_ETHERNET_SETTINGS);

I sincerely hope arduino.cc do a better job than this when they release their version of this interesting board.

Debugging on the Zero

The ability to use gdb to debug the firmware is what makes this board so attractive. The 1.7.2 IDE package includes a copy of OpenOCD with configuration files for communicating with the Zero. While this is not integrated into the current IDE at all, it is still possible to start stepping through the firmware. You do need to know something about the way the Arduino IDE handles building files to make use of this, however.

OpenOCD is run as a server that mediates communications with the hardware. The following script makes it simple to launch OpenOCD in a separate window on Windows (adjust the Arduino directory path as appropriate). This gives a console showing any OpenOCD output and two TCP/IP ports will be opened. One on 4444 is for communicating with OpenOCD itself. The other on 3333 is for debugging.

@setlocal
@set ARDUINO_DIR=C:\opt\arduino.org-1.7.2
@set OPENOCD_DIR=%ARDUINO_DIR%\hardware\tools\OpenOCD-0.9.0-dev-arduino
@start "OpenOCD" %OPENOCD_DIR%\bin\openocd ^
  --file %ARDUINO_DIR%\hardware\arduino\samd\variants\arduino_zero\openocd_scripts\arduino_zero.cfg ^
  --search %OPENOCD_DIR%\share\openocd\scripts %*

To connect for debugging it is necessary to run a suitable version of gdb and set it to use the remote target provided by OpenOCD on localhost:3333. Provided the sources (the Arduino .ino file and any .cpp or .h files included with the project) and the .elf binary are specified, gdb can show symbolic debugging information. This is where the Arduino IDE needs to provide some assistance. The .ino source file is processed to produce some C++ files and then built with g++, but this all happens in a temporary directory with a fairly random name. The most recently created build directory in your temporary directory (%TEMP%) will hold the build files. From this folder, start arm-none-eabi-gdb.exe and run the following commands in gdb to enable symbols and start controlling the firmware. After that it is normal gdb debugging.

Note that gdb is found at <ARDUINODIR>\hardware\tools\gcc-arm-none-eabi-4.8.3-2014q1\bin\arm-none-eabi-gdb.exe

# gdb commands...
directory SKETCHFOLDER\\PROJECTNAME
file PROJECTNAME.cpp.elf
target remote localhost:3333
monitor reset halt
break setup
continue
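Finding the build directory by hand gets tedious. If a Unix-like shell such as Git Bash is available on the Windows machine (an assumption, it is not part of the Arduino package), the newest build folder can be picked out as sketched below. newest_build_dir is a hypothetical helper name and the build*.tmp pattern is taken from the folder name that appears in the gdb session in the worked example.

```shell
# Print the most recently modified Arduino build directory under $1.
# Prints nothing if no build*.tmp directory exists there.
newest_build_dir() {
    ls -td "$1"/build*.tmp 2>/dev/null | head -n 1
}
```

Something like cd "$(newest_build_dir "$TEMP")" then puts the shell in the right place to launch gdb.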

Worked example

Launching OpenOCD using the script described above.

Open On-Chip Debugger 0.9.0-dev-g1deebff (2015-02-19-15:29)

Licensed under GNU GPL v2

For bug reports, read

http://openocd.sourceforge.net/doc/doxygen/bugs.html

Info : only one transport option; autoselect 'cmsis-dap'

adapter speed: 500 kHz

adapter_nsrst_delay: 100

cortex_m reset_config sysresetreq

Info : CMSIS-DAP: SWD Supported

Info : CMSIS-DAP: JTAG Supported

Info : CMSIS-DAP: Interface Initialised (SWD)

Info : CMSIS-DAP: FW Version = 01.1F.0118

Info : SWCLK/TCK = 1 SWDIO/TMS = 1 TDI = 1 TDO = 1 nTRST = 0 nRESET = 1

Info : DAP_SWJ Sequence (reset: 50+ '1' followed by 0)

Info : CMSIS-DAP: Interface ready

Info : clock speed 500 kHz

Info : IDCODE 0x0bc11477

Info : at91samd21g18.cpu: hardware has 4 breakpoints, 2 watchpoints

Launching gdb

GNU gdb (GNU Tools for ARM Embedded Processors) 7.6.0.20140228-cvs
Copyright (C) 2013 Free Software Foundation, Inc.
License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>
This is free software: you are free to change and redistribute it.
There is NO WARRANTY, to the extent permitted by law. Type "show copying"
and "show warranty" for details.
This GDB was configured as "--host=i686-w64-mingw32 --target=arm-none-eabi".
For bug reporting instructions, please see:
<http://www.gnu.org/software/gdb/bugs/>.
(gdb) file SerialTest1.cpp.elf
Reading symbols from C:\Users\pt111992\AppData\Local\Temp\build1861788453707041664.tmp\SerialTest1.cpp.elf...done.
(gdb) directory c:\\src\\Arduino\\SerialTest1
Source directories searched: c:\src\Arduino\SerialTest1;$cdir;$cwd
(gdb) target remote localhost:3333
Remote debugging using localhost:3333
0x000028b8 in ?? ()
(gdb) monitor reset halt
target state: halted
target halted due to debug-request, current mode: Thread
xPSR: 0x21000000 pc: 0x000028b8 msp: 0x20002c08
(gdb) break setup
Breakpoint 1 at 0x415e: file SerialTest1.ino, line 12.
(gdb) continue
Continuing.
Note: automatically using hardware breakpoints for read-only addresses.
Breakpoint 1, setup () at SerialTest1.ino:12
12 Serial.begin(115200);
(gdb) next
setup () at SerialTest1.ino:13
13 Serial.println("Serial test - v1.0.0");
(gdb)

And now we are stepping through the code actually running on the Arduino Zero. Awesome!

I wanted to setup a monitor showing the status of the jobs in our Jenkins cluster to emphasise the importance of fixing broken builds within the team. This requires some screen to permanently display a web page configured using the Build Monitor Plugin view of a selection of the Jenkins jobs. As we have an old XP machine that has been retired it seemed like an ideal task for a lightweight Linux that boots straight into an X session that just runs a single web-browser task.

I selected lubuntu as a suitable distribution that does not require too many resources (we only have 256MB RAM on this PC). Lubuntu uses the LXDE desktop environment to keep resource usage low but uses the same base and package system as standard Ubuntu, which we are already familiar with.

On startup the system defaults to launching lightdm as the X display manager. To configure a crafted session we create a new desktop file (kiosk.desktop) and add a new user account for the kiosk login. We do not need to know the password for this account as lightdm can be configured to autologin as this user by adding the following lines to the /etc/lightdm/lightdm.conf file:

# lines to include in /etc/lightdm/lightdm.conf
[SeatDefaults]
autologin-user=kiosk
autologin-user-timeout=0
user-session=kiosk

# kiosk.desktop
[Desktop Entry]
Encoding=UTF-8
Name=Kiosk Mode
Comment=Chromium Kiosk Mode
Exec=/home/kiosk/kiosk.sh
Type=Application

#!/bin/bash
# kiosk.sh - session creation
/usr/bin/xset s off
/usr/bin/xset -dpms
/usr/bin/ratpoison &
/usr/bin/chromium-browser http://jenkins/view/Status/ --kiosk --incognito --start-maximized
/usr/bin/xset s on
/usr/bin/xset +dpms

These lines cause the system to automatically log the kiosk user account in and start the kiosk user session which will run the program defined in our kiosk.desktop file. For this all we now need to do is disable the screen-saver and monitor DPMS function and launch chromium to display the correct page. On the first attempt we found that the browser did not fill the screen and while this can be configured it was simpler to add the ratpoison tiling window manager to deal with this.

To avoid wasting power when no-one is around to view this display a cronjob was added to turn the monitor off at night and back on for the working day. The script used below either turns the monitor on and then disables DPMS or turns it off and re-enables the DPMS mode.

#!/bin/sh
# Turn monitor permanently on or off.

DISPLAY=:0.0; export DISPLAY
COOKIE=/var/run/lightdm/root/\:0

# Record a message in syslog under the DPMS tag.
log()
{
    logger -p local0.notice -t DPMS "$*"
}

# Force the given DPMS state and log whether it worked.
dpms_force()
{
    xset dpms force "$1" && log "$1" || log "FAILED to set $1"
}

if [ $# != 1 ]
then
    echo >&2 "usage: dpms on|off|standby|suspend"
    exit 1
fi

# Pick up the X authority cookie for the lightdm session if readable.
[ -r "$COOKIE" ] && xauth merge "$COOKIE"

case "$1" in
    on)
        # Turn on and disable the DPMS to keep it on.
        dpms_force on
        xset -dpms
        ;;
    off|standby|suspend)
        # Allow to turn off and leave DPMS enabled.
        dpms_force "$1"
        ;;
    *)
        echo >&2 "invalid dpms mode \"$1\": must be on, off, standby or suspend"
        exit 1
        ;;
esac

We have been using GitLab to provide access control and repository management for Git at work. Originally I looked for something to manage user access and found that gitolite best suited our needs, with one exception. Gitolite access is managed by making commits to the gitolite-admin repository and pushing them, which is not especially user friendly. It is easy to automate, however, and so in searching for a web front end to gitolite I found GitLab.

GitLab is a Rails web application that can handle user account management and repository access for git using gitolite underneath. It has a number of plugins to allow for a selection of authentication mechanisms, and in a Windows culture we can set it up for LDAP authentication against the local Active Directory servers so that our users can use their normal Windows login details and do not have to remember yet another password. They do however have to create an SSH key and upload it to GitLab, but GitExtensions provides a reasonable user interface for dealing with this. In practice we find Windows users can create an account and create repositories with minimal assistance.

However, I find that, at least on the server we are using, repository browsing in GitLab is quite slow and all repository access requires an account. I have some automated processes that don't really need an SSH key as they are read-only, and these use the git protocol to update their clones. I also like using gitweb for repository browsing.

gitolite has a solution to this that involves using two pseudo-user accounts, but GitLab erases these whenever it updates the gitolite configuration file. Further, if we simply create the git-daemon-export-ok file that git-daemon uses to test for exported repositories, either GitLab or GitExtensions will delete it on the next configuration update. So in searching for some way around this I found the git-daemon access-hook parameter. This allows git-daemon to call an external program to check for access. If we combine this with the gitweb projects file, which can be used to give gitweb a specific set of visible directories, then we don't have to hack GitLab itself.

This is achieved by creating a text file that just lists the repository names we wish to be publicly readable via git-daemon and gitweb. Then edit /etc/gitweb.conf and set the $projects_list variable to point to this file.

$projects_list = "/home/git/gitweb.projects";
$export_auth_hook = undef; # give up on the git-daemon-export-ok files.

Next, set up our git-daemon xinetd configuration to call our access hook script.

# /etc/xinetd.d/git-daemon

service git

{

disable = no

type = UNLISTED

port = 9418

socket_type = stream

wait = no

user = git

server = /usr/local/bin/git

server_args = daemon --inetd --base-path=/home/git/repositories --export-all --access-hook=/home/git/git-daemon-access.sh /home/git/repositories

log_on_failure += USERID

}

After a bit of experimentation I found that you need to --export-all as well as have the access-hook.

Finally, the access hook script.

#!/bin/bash
#
# Called up from git-daemon to check for access to a given repository.
# We check that the repo is listed in the gitweb.projects file as these
# are the repos we consider publicly readable.
#
# Args are: service-name repo-path hostname canonical-hostname ipaddr port
# To deny, exit with non-zero status

logger -p daemon.debug -t git-daemon "access: $*"

[ -r /home/git/gitweb.projects ] \
    && /bin/grep -q -E "^${2#/home/git/repositories/}(\\.git)?\$" /home/git/gitweb.projects

Here we log access attempts and fail if the gitweb.projects file is missing. Then trim off the base-path prefix and check for the repository name being listed in the file.
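To try the lookup logic without going through git-daemon, the check can be pulled into a function and fed test paths. This is a sketch rather than the hook itself: repo_is_public is a hypothetical name, and it swaps the regular expression for fixed-string matching so that dots in repository names are not treated as wildcards.

```shell
# Succeed if the repository at path $2 is listed in projects file $1.
# $3 is the base path to strip (defaults to /home/git/repositories).
# A listing of either NAME or NAME.git is accepted, as in the hook.
repo_is_public() {
    projects_file=$1
    base=${3:-/home/git/repositories}
    name=${2#"$base"/}
    [ -r "$projects_file" ] || return 1
    grep -q -F -x -e "$name" -e "$name.git" "$projects_file"
}
```

For example repo_is_public /home/git/gitweb.projects /home/git/repositories/widgets.git && echo allowed, where widgets.git is a made-up repository name.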

Now I can have GitLab and git-daemon and gitweb available.

In the future it might be nice to use this mechanism to have GitLab provide some control of this from its user interface. That way I will avoid having to occasionally update this project file. However, that's something for another day - especially as the most recent release of GitLab has dropped the gitolite back-end in favour of an internal package (git-shell). I'm waiting to see how that turns out.

Recently I have been persuading my co-workers that we should be using Git instead of CVS. To help this along I've spent some time trying to ensure that any switch over will be as painless as I can. At the moment they are using TortoiseCVS on the workstations with the CVS server being CVSNT on a Windows machine. This is a convenient setup on a Windows domain as we can use the NT domain authentication details to handle access control without anyone needing to remember specific passwords for CVS. The repositories are just accessed using the :sspi:MACHINE:/cvsroot path and it all works fine.

One thing we missed about CVS even when we originally switched to it was that it was hard to tell what set of files had been committed together. CVSNT adds a changeset id to help with this and can also be configured to record an audit log to a MySQL database. So a few years ago we made an in-house web app that shows a changeset view of the CVS repository and helps us review new commits and do changeset merges from one branch to another using the cvsnt extended syntax (cvs update -j "@<COMMITID" -j "@COMMITID"). So if they are to switch we must ensure the same facilities continue to be available. We also need the history converted, but that's the easy part, using cvs2git. I've already been running a git mirror of the cvs repository for over a year by converting twice a day and then pushing the new conversion onto the mirror. It is done this way as cvsimport got some things wrong and cvs2git doesn't do incremental imports. However, a full conversion takes only about 40 minutes of processing, and pushing the new repository onto the old one helps to show up any problems quickly (like the time one dev worked out how to edit the cvs commit comments for old commits).

So we need a nice changeset viewing tool, access control - preferably seamless with Windows domains, and simple creation of new repositories. The first is the simplest. gitweb is included with git and provides a nice view of any repositories you feed it. Initially I found it a bit slow on my Linux server with Apache, but switching from CGI to Fast-CGI has sorted this out. In case this helps I had to install libapache2-mod-fastcgi, libcgi-fast-perl and libfcgi-perl. Then added the following to /etc/apache2/conf.d/gitweb. Supposedly this can run under mod_perl but I failed to make that work. The fast-cgi setup is performing well though.

# gitweb.fcgi is just a link to gitweb.cgi
ScriptAlias /gitweb /usr/local/share/gitweb/gitweb.fcgi
<Location /usr/local/share/gitweb/gitweb.fcgi>
  SetHandler fastcgi-script
  Options +ExecCGI
</Location>

Next we need access control. The best-of-breed for this appears to be gitolite and it does a fine job. This uses ssh keys to authenticate developers for repository access and means there is only a single unix user account required. It also permits access control down to individual branches, which may be quite useful. The way this is configured is by pushing committed changes to an administrative git repository. I can see this not being taken so favourably by my fellow developers, although it is very powerful. So I thought I might need some kind of web UI for gitolite and discovered GitLab. This fills the gap very nicely by sitting on top of gitolite and giving a simple method to create new repositories and control the access policy. If we need finer control than is provided by gitlab, then we can still use the gitolite features directly.

Setting up gitlab on an Ubuntu 10.04 LTS server was a minor pain. Gitlab is a Ruby-on-Rails application and this kind of thing appears to enjoy using the cutting edge releases of everything. However, the Ubuntu server apt repositories are not keeping up, so for Ruby it is best to compile everything locally and give up on apt-get. Following the instructions it was relatively simple to get gitlab operating on our server. It really does need the umask changing as mentioned though. I moved some repositories into it by creating them in gitlab then using 'push --mirror' to load the git repositories in. The latest version supports LDAP logins, so once configured it is now possible to use the NT domain logins to access the gitlab account. From there, developers can load up an ssh key generated using either git-gui or gitextensions, create new repositories and push.

With gitlab operating fine as a standalone rails application it needed to be integrated with the Apache server. It seems Rails people like Nginx and other servers - however, Apache can host Rails applications fine using Passenger. This was very simple to install and getting the app hosted was no trouble. There is a problem if you host Gitlab under a sub-uri on your server. In this case LDAP logins fail to return the authenticated user to the correct location. So possibly it will be best to host the application under a sub-domain but at the moment I'm sticking with a sub-uri and trying to isolate the fault. My /etc/apache2/conf.d/gitlab file:

# Link up gitlab using Passenger
Alias /gitlab/ /home/gitlab/gitlabhq/
Alias /gitlab /home/gitlab/gitlabhq/
RackBaseURI /gitlab
<Directory /home/gitlab/gitlabhq/>
  Allow from all
  Options -MultiViews
</Directory>

Now there are no excuses left. Let's hope this keeps them from turning to TFS!

A commit recently merged to the CyanogenMod tree caught my eye - Change I5be9bd4b: bluetooth networking (PAN). Bluetooth Personal Area Networking permits tethering over bluetooth. Now this is something that will allow me to tether a Wifi Xoom to my phone when I'm someplace without wifi. Unfortunately, while this is in the CM tree, we need some additional kernel support for the Nexus S. The prebuilt kernel provided with CM 7.1.0 RC1 doesn't include BNEP support, which we need to complete this feature. So let's build a kernel module.

The Nexus S kernel is maintained at android.git.kernel.org but I notice there is also a CyanogenMod fork on github. So to begin we can clone these and see what differences exist between the stock kernel and the CM version. To set up the repository:

mkdir kernel && cd kernel
git clone git://android.git.kernel.org/kernel/samsung.git samsung
cd samsung
git remote add cm git://github.com/CyanogenMod/samsung-kernel-crespo.git
git remote update
git log --oneline origin/android-samsung-2.6.35..cm/android-samsung-2.6.35
f288739 update herring_defconfig

The only difference between the samsung repository and the CM repository is a single commit changing the configuration. This has evidently been done using the kernel configuration utility, so it's a bit hard to work out the changes by comparing the config files directly. However, if I use each config in turn and regenerate a new one via the kernel configuration utility I can then extract just the changes.

git cat-file blob cm/android-samsung-2.6.35^:arch/arm/configs/herring_defconfig > x_prev.config
git cat-file blob cm/android-samsung-2.6.35:arch/arm/configs/herring_defconfig > x_cm.config

Then make gconfig and load each one, saving to a new file (z_prev.config and z_cm.config). Running diff -u z_prev.config z_cm.config, we can see that the new settings are just:

CONFIG_SLOW_WORK=y
CONFIG_TUN=y
CONFIG_CIFS=y
CONFIG_CIFS_STATS=y
CONFIG_CIFS_STATS2=y
CONFIG_CIFS_WEAK_PW_HASH=y
CONFIG_CIFS_XATTR=y
CONFIG_CIFS_POSIX=y
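Rather than reading the unified diff by eye, the newly enabled settings can be listed directly from the two regenerated files. A rough sketch, with new_config_settings as a hypothetical helper name; it only reports CONFIG_ lines present in the second file and absent from the first.

```shell
# Print CONFIG_ lines that appear in file $2 but not in file $1.
new_config_settings() {
    old_sorted=$(mktemp) && new_sorted=$(mktemp) || return 1
    grep '^CONFIG_' "$1" | sort > "$old_sorted"
    grep '^CONFIG_' "$2" | sort > "$new_sorted"
    # comm -13 keeps only the lines unique to the second input.
    comm -13 "$old_sorted" "$new_sorted"
    rm -f "$old_sorted" "$new_sorted"
}
```

Here that would be new_config_settings z_prev.config z_cm.config. The output comes back sorted rather than in config file order.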

In current versions of Linux the modules retain version information that includes the git commit id of the kernel source used to build them. This is also present in the kernel and the kernel will reject a module with the wrong id. So to make a module that will load into the currently running kernel I need to checkout that version - simple enough as it is the current head-but-one of the cm-samsung-kernel repository (e382d80). Adding CONFIG_BNEP=m to the kernel config file enables building BNEP support as a module and taking the HEAD herring_defconfig and building HEAD^ results in a compatible bnep.ko module.

To test this I copied the module onto the device and restarted the PAN service.

% adb push bnep.ko /data/local/tmp/bnep.ko
% adb shell
# insmod /data/local/tmp/bnep.ko
# ndc pan stop
# ndc pan start
# exit

With this done we can try it out. The Blueman app on Ubuntu lets me rescan the services on a device and following the above changes the context menu for my device now shows Network Access Point. Selecting this results in a bluetooth tethering icon on the Nexus S and we are away. Further checking on the Xoom with Wifi disabled proves that it all works. Routing is properly configured and the Xoom can access the internet via the phone.

To make this permanent, I could just remount the phone system partition read-write and edit /system/etc/init.d/04modules to add my new module on restart. That works ok. However, I may as well add the configuration changes from above to the current samsung stock kernel and change the kernel in use when I re-build a CyanogenMod image. So that is what I am running now.

Moving Python packages out of the Windows Roaming profile

On my work machine it has recently been taking quite a while to start-up when it is first turned on. This tends to mean there is too much data in the corporate roaming profile so I started to look into this. I recently installed ActivePython and added a few packages using the python package manager (pypm). This has dumped 150MB into my Roaming profile. This is the wrong place for this stuff on Windows 7. It should be in LOCALAPPDATA which would restrict it to just this machine (where I have Python installed) and not get copied around when logging in and out.

A search points to PEP 370 as being responsible for this design, and this document suggests how to move the location. In the specific case of ActivePython the packages are stored in %APPDATA%\Python, so we need to set the PYTHONUSERBASE environment variable to %LOCALAPPDATA%\Python. Editing the environment using the Windows environment dialog and using %LOCALAPPDATA% doesn't work correctly as the variable does not get expanded. However, we can set it to %USERPROFILE%\AppData\Local\Python, which is expanded properly and produces the right result.

Once the environment is setup we can move the package directory from %APPDATA%\Python to %LOCALAPPDATA%\Python and check everything is still available:

C:\Users\pat> pypm info
PyPM 1.3.1 (ActivePython 2.7.1.4)
Installation target: "~\AppData\Local\Python" (2.7)
(type "pypm info --full" for detailed information)

C:\Users\pat>pypm list
numpy   1.6.0     NumPy: array processing for numbers, strings, records, a
pil     1.1.7~2   Python Imaging Library
xmpppy  0.5.0rc1  XMPP-IM-compliant library for jabber instant messaging.